Using Cloudflare Tunnels in Kubernetes Clusters

Introduction: Why Connectivity Matters

Resilient networking is fundamental to reliable applications. Kubernetes makes it easy to deploy microservices, but each public endpoint increases exposure to the wider internet. Administrators patch gateways, configure firewalls, and combat DDoS attacks just to keep services reachable. As applications expand across multiple clusters and regions, finding efficient routes for user traffic becomes a serious challenge. High latency can frustrate customers and hinder real-time workloads. Cloudflare Tunnels address these concerns by reversing the connection model. Instead of accepting inbound traffic on a public address, the cluster establishes an outbound link to Cloudflare. From the user’s perspective, the service still appears at a stable domain, yet the underlying infrastructure is hidden behind Cloudflare’s robust edge.

By shifting to this approach, teams no longer worry about running external load balancers or exposing nodes directly to the internet. They gain Cloudflare’s DDoS protection and can leverage the network’s vast global footprint to deliver content with fewer hops. This modern architecture provides both speed and security, a compelling combination for anyone operating sensitive or latency‑critical workloads in Kubernetes.

What Are Cloudflare Tunnels?

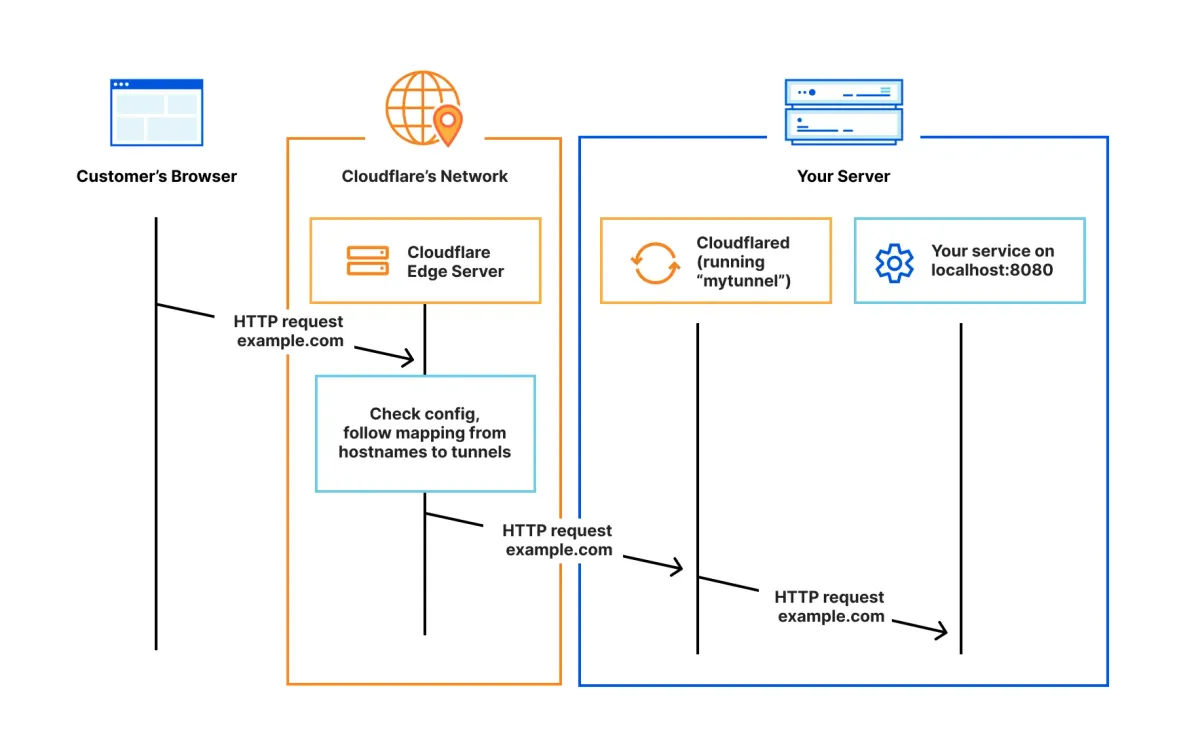

Cloudflare Tunnels rely on a lightweight daemon called cloudflared. Once the agent starts, it authenticates with your Cloudflare account and opens persistent outbound connections to nearby Cloudflare data centers, using QUIC by default and falling back to HTTP/2. All requests to your domain first reach Cloudflare’s edge and are then carried through the tunnel back to your cluster. Because the connections originate from inside your infrastructure, there is no need to open inbound ports or assign public IP addresses. The edge can perform TLS termination, apply security policies, and integrate with Cloudflare Access for user authentication. In effect, the tunnel turns Cloudflare into an external ingress layer that never exposes the underlying pod or node addresses.

Kubernetes Networking Basics

Kubernetes typically exposes workloads through NodePort or LoadBalancer Services, or through Ingress resources. In most setups these mechanisms give clients a publicly reachable endpoint. While straightforward, the public nature of those endpoints invites traffic from anywhere. Intrusion attempts, vulnerability scans, and DDoS attacks are inevitable. Operators add WAFs or network policies, but the risk remains because the services are accessible on the open internet. Additionally, global users often cross multiple ISPs and networks before arriving at your cluster, which adds latency and jitter. Cloudflare Tunnels mitigate both problems by hiding the cluster and routing requests across Cloudflare’s backbone.

Integrating Cloudflare Tunnels with Kubernetes

To integrate Cloudflare Tunnels, you first create a tunnel. Creating it with the cloudflared CLI produces a credentials file, while creating it in the Cloudflare dashboard issues a tunnel token. Store either one in a Kubernetes Secret. Next, deploy cloudflared as a standalone Deployment or, less commonly, as a sidecar container. The container connects to Cloudflare automatically and proxies requests to the internal Services listed in its ingress rules. Configuration can map several hostnames or paths to different Service ports within the cluster. Traffic destined for api.alex.rocks might route to a backend deployment, while docs.alex.rocks could map to a separate service. Because everything runs over outbound connections from cloudflared, you reduce the need for multiple load balancers and simplify network topology. When several cloudflared replicas serve the same tunnel, Cloudflare can send traffic to any healthy replica, so service continues if one pod restarts or a node fails.
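
The hostname-to-Service mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not cloudflared’s own implementation: the tunnel ID, file path, and in-cluster Service URLs are hypothetical placeholders, and the matcher only handles exact hostnames (wildcards and path rules are left out). The keys tunnel, credentials-file, and ingress follow the cloudflared configuration file layout, including the required final catch-all rule.

```python
import json

# Hypothetical tunnel ID, credentials path, and Service URLs, for illustration only.
CONFIG = {
    "tunnel": "example-tunnel-id",
    "credentials-file": "/etc/cloudflared/creds/credentials.json",
    "ingress": [
        {"hostname": "api.alex.rocks",
         "service": "http://backend.default.svc.cluster.local:8080"},
        {"hostname": "docs.alex.rocks",
         "service": "http://docs.default.svc.cluster.local:80"},
        # cloudflared expects the last rule to be a catch-all with no hostname.
        {"service": "http_status:404"},
    ],
}


def resolve(config: dict, hostname: str) -> str:
    """Return the service of the first rule that matches the hostname.

    Rules are checked in order; a rule without a hostname matches everything.
    Only exact hostname matches are modeled in this sketch.
    """
    for rule in config["ingress"]:
        pattern = rule.get("hostname")
        if pattern is None or pattern == hostname:
            return rule["service"]
    raise ValueError("ingress rules must end with a catch-all rule")


def render(config: dict) -> str:
    """Serialize the config as JSON, which YAML parsers also accept."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(resolve(CONFIG, "api.alex.rocks"))
    print(resolve(CONFIG, "unknown.alex.rocks"))
```

Keeping the rules in an ordered list mirrors the first-match behavior of the ingress section, so an unknown hostname falls through to the catch-all rather than reaching a real Service.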

Latency Benefits: Leveraging Cloudflare’s Global Network

Cloudflare operates data centers in hundreds of cities. When a user sends a request, it typically reaches a nearby Cloudflare edge location within milliseconds. From there, Cloudflare’s private backbone carries the traffic to the tunnel endpoint with minimal additional hops. This optimized route can be significantly faster than traversing the public internet to a distant load balancer, although the gain depends on where users and the cluster are located. Static assets can be cached at the edge, and optional features such as Argo Smart Routing steer requests along the fastest available path across the network. For global businesses, the reduction in latency is tangible: pages load faster, API responses arrive sooner, and real-time applications feel more responsive regardless of where users connect from.

Security Advantages: Private Clusters Without Public Ingress

Eliminating public ingress drastically limits exposure. Attackers scanning IP ranges will not find open ports or accessible endpoints on the cluster, making it an unattractive target. Cloudflare’s edge filters malicious traffic, blocks common exploits, and absorbs volumetric DDoS attacks before packets reach your infrastructure. With Cloudflare Access, you can define identity-based policies that require users to authenticate through SSO providers before they are allowed through the tunnel. Unauthorized requests therefore never leave Cloudflare’s network, which sharply reduces the attack surface. Compliance audits become simpler because the cluster has no publicly reachable service to inspect. Teams can also restrict egress from the cluster, further containing lateral movement in the event of a breach elsewhere.
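
One way to restrict egress is a Kubernetes NetworkPolicy scoped to the cloudflared pods. The sketch below builds such a policy as a Python dict and prints it as JSON, which kubectl accepts. The policy name, namespace, and app label are assumptions made for illustration, and enforcement depends on the cluster’s network plugin supporting NetworkPolicy. It allows tunnel traffic on port 7844, DNS lookups, and traffic to pods in the same namespace so cloudflared can still reach the Services it fronts.

```python
import json


def cloudflared_egress_policy(namespace: str = "default",
                              app_label: str = "cloudflared") -> dict:
    """Build an egress-only NetworkPolicy for pods labeled app=<app_label>."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        # Hypothetical policy name.
        "metadata": {"name": "cloudflared-egress", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app_label}},
            "policyTypes": ["Egress"],
            "egress": [
                # Tunnel connections: QUIC uses UDP 7844, HTTP/2 uses TCP 7844.
                {"ports": [{"protocol": "UDP", "port": 7844},
                           {"protocol": "TCP", "port": 7844}]},
                # DNS resolution.
                {"ports": [{"protocol": "UDP", "port": 53},
                           {"protocol": "TCP", "port": 53}]},
                # Backends: any pod in the same namespace, on any port.
                {"to": [{"podSelector": {}}]},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(cloudflared_egress_policy(), indent=2))
```

If the backends live in other namespaces, the last rule would need a namespaceSelector as well; the same-namespace rule here is only the simplest case.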

Setting Up Cloudflare Tunnels in a Cluster

After creating a tunnel and saving its credentials, deploy the cloudflared container. A typical configuration lists ingress rules, each pairing the hostname clients will use with the in-cluster Service URL that should receive the traffic. Because the tunnel originates from within the cluster, it works from private networks or behind NAT gateways without opening inbound firewall rules. To ensure reliability, run multiple cloudflared replicas. Cloudflare can route requests through any healthy replica, so upgrades or failures do not disrupt access. Administrators can manage mappings via configuration files committed to version control, allowing changes to be audited like any other infrastructure-as-code setup.
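
The deployment step can be sketched as a Python function that builds a Deployment manifest and prints it as JSON. This is an illustrative layout under stated assumptions rather than an official manifest: the ConfigMap name cloudflared-config, the Secret name tunnel-credentials, the mount paths, and metrics port 2000 are all hypothetical choices. The image name and the tunnel, --config, --metrics, and run arguments are cloudflared’s own.

```python
import json


def cloudflared_deployment(replicas: int = 2) -> dict:
    """Build a Deployment manifest for cloudflared as a plain dict."""
    labels = {"app": "cloudflared"}
    container = {
        "name": "cloudflared",
        # Pin a specific image tag in real deployments.
        "image": "cloudflare/cloudflared:latest",
        "args": [
            "tunnel",
            "--config", "/etc/cloudflared/config/config.yaml",
            "--metrics", "0.0.0.0:2000",
            "run",
        ],
        # The metrics server also answers /ready once the tunnel is connected.
        "livenessProbe": {
            "httpGet": {"path": "/ready", "port": 2000},
            "initialDelaySeconds": 10,
            "periodSeconds": 10,
        },
        "volumeMounts": [
            {"name": "config", "mountPath": "/etc/cloudflared/config",
             "readOnly": True},
            {"name": "creds", "mountPath": "/etc/cloudflared/creds",
             "readOnly": True},
        ],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "cloudflared", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [container],
                    "volumes": [
                        # Hypothetical ConfigMap holding config.yaml.
                        {"name": "config",
                         "configMap": {"name": "cloudflared-config"}},
                        # Hypothetical Secret holding the tunnel credentials.
                        {"name": "creds",
                         "secret": {"secretName": "tunnel-credentials"}},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(cloudflared_deployment(), indent=2))
```

Piping the output to kubectl apply -f - would create the Deployment. Generating the manifest from code keeps the replica count and mount paths in one reviewable place, which fits the version-controlled workflow described above.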

Operational Considerations: Scaling, Monitoring, and Troubleshooting

Operating tunnels at scale requires monitoring. The cloudflared agent exposes metrics in Prometheus format, such as connection status, request counts, and error rates, which you can scrape with Prometheus and visualize in Grafana. Cloudflare’s dashboard complements these metrics with logs and threat analytics, highlighting blocked attacks or latency trends. During troubleshooting, verify that DNS records point to the correct tunnel and that pods can establish outbound connections to Cloudflare on port 7844 (UDP for QUIC, TCP for HTTP/2); port 443 is needed only for auxiliary calls such as update checks. If latency spikes occur, check Cloudflare’s analytics to see whether traffic is routed optimally and confirm that your tunnel replicas are healthy. Periodically rotating tunnel credentials also reduces the risk of compromised tokens.
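
As a rough sketch of the kind of check an alert might encode, the Python below parses Prometheus text output and flags a replica with too few edge connections or a high error rate. The sample text is made up for illustration and is not captured from a real agent. The metric names are written from memory of cloudflared’s metrics and should be treated as assumptions to verify against your own /metrics output, and the thresholds are arbitrary.

```python
# Illustrative sample only; not real output from a cloudflared instance.
SAMPLE = """\
# TYPE cloudflared_tunnel_ha_connections gauge
cloudflared_tunnel_ha_connections 4
# TYPE cloudflared_tunnel_total_requests counter
cloudflared_tunnel_total_requests 1200
# TYPE cloudflared_tunnel_request_errors counter
cloudflared_tunnel_request_errors 6
"""


def parse_metrics(text: str) -> dict[str, float]:
    """Parse samples from Prometheus text exposition format.

    Keys include any label set verbatim. Samples that carry a trailing
    timestamp are not handled by this sketch.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        name, _, value = line.rpartition(" ")
        samples[name] = float(value)
    return samples


def health_problems(samples: dict[str, float],
                    min_connections: int = 2,
                    max_error_rate: float = 0.01) -> list[str]:
    """Return human-readable problems; an empty list means healthy."""
    # Metric names below are assumptions; confirm them on your agent.
    connections = samples.get("cloudflared_tunnel_ha_connections", 0)
    total = samples.get("cloudflared_tunnel_total_requests", 0)
    errors = samples.get("cloudflared_tunnel_request_errors", 0)
    error_rate = errors / total if total else 0.0

    problems = []
    if connections < min_connections:
        problems.append(f"only {connections:.0f} edge connections")
    if error_rate > max_error_rate:
        problems.append(f"error rate {error_rate:.2%}")
    return problems


if __name__ == "__main__":
    print(health_problems(parse_metrics(SAMPLE)))
```

In practice the same conditions would usually live in Prometheus alerting rules; the script form is only meant to make the logic explicit.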

Case Study: Latency and Security Improvements

A hypothetical SaaS provider hosts its main Kubernetes cluster in Frankfurt. Customers across North America, Europe, and Asia rely on the service daily. Initially, the provider used a standard public load balancer. While functional, transatlantic latency for North American customers hovered around 150 milliseconds. After the provider adopted Cloudflare Tunnels, those users connected to Cloudflare’s edge in New York or Chicago, from which traffic traveled through Cloudflare’s backbone to Frankfurt. Latency dropped by about 40 milliseconds, to roughly 110 milliseconds, giving users a noticeably snappier experience. Security audits also improved. After removing the public load balancer, the company saw fewer intrusion attempts, and compliance reviewers were pleased that no public endpoints existed on the cluster itself.
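
To put the case-study figures in proportion, the arithmetic can be worked through directly. The snippet uses only the two numbers from the hypothetical scenario above; the variable names are illustrative.

```python
baseline_ms = 150  # latency through the public load balancer
saving_ms = 40     # improvement after adopting tunnels

after_ms = baseline_ms - saving_ms
reduction_pct = saving_ms / baseline_ms * 100

print(f"{after_ms} ms after, {reduction_pct:.1f}% lower")
# prints "110 ms after, 26.7% lower"
```

A reduction of about a quarter on every round trip compounds for pages or API flows that need several sequential requests.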

Best Practices and Lessons Learned

Automate tunnel deployment with Helm charts or Terraform to avoid configuration drift. Combine tunnels with Cloudflare Access policies to enforce identity checks at the edge. Monitor the health of each cloudflared instance, and set up alerts for disconnects. Perform periodic failover tests by stopping an agent and verifying that others seamlessly handle traffic. Keep credentials in a secure secrets manager and rotate them according to your organization’s security policies. Finally, review your cloud provider’s egress costs, as tunneled traffic counts toward outbound bandwidth.

Final Thoughts

Cloudflare Tunnels provide an elegant way to expose Kubernetes services without the risk of public ingress. By shifting traffic through Cloudflare’s network, you minimize attack vectors while often improving response times for users around the world. The simplicity of deploying a small agent and the power of Cloudflare’s global edge make tunnels an attractive option for teams focused on security and performance. With thoughtful monitoring and automated management, Cloudflare Tunnels can become a reliable cornerstone of your cloud-native architecture, delivering both peace of mind and a smoother experience for your customers.